Publications

At MIG 2019, I presented Robust Marker Trajectory Repair for MOCAP using Kinematic Reference, a paper on robust marker cleanup for motion-captured data.

Motion capture (MOCAP) is one of the two main methods used to gather animation data for games, the other being keyframing. Keyframing requires artists to manually create frames for animated characters, a process that demands both expertise and time. MOCAP, on the other hand, lets actors perform the desired motions, which are captured and transferred onto animated characters, greatly speeding up the process of gathering realistic motion data.

MOCAP itself can be divided into two broad categories: marker-based and marker-less. While marker-less methods have recently enjoyed a surge of interest in both academia and industry startups, passive marker-based methods remain the standard for high-fidelity motion capture in AAA productions.

Despite their ubiquity in production, passive markers suffer from major issues that hinder their performance and create a bottleneck for the entire pipeline. Markers can be obscured from view by props, limbs, and other characters. Because passive markers are indistinguishable from one another, their labels can swap when two markers pass close to each other. Camera noise can cause calculated marker positions to deviate from their ground truths. All of these issues are traditionally solved by specially trained MOCAP artists, whose role is to clean marker data by directly editing marker paths, a time-consuming process. Automatic solutions to these issues can largely be classified by the level at which they operate: the marker level or the kinematic level.

Marker-level solutions attempt to fix marker paths directly by filling in marker gaps. Solutions such as spline filling work adequately for short missing segments but fail for larger gaps in marker data. Larger gaps are usually handled with reference markers, which can be set up manually for a given marker set or computed automatically. Marker-level solutions share one common problem: a lack of kinematic cohesion. In most production pipelines, marker path data is not used directly. Rather, a solver generates a set of hierarchical joints that represent the marker motion in kinematic space. Paths that appear valid in marker space can therefore still result in a broken kinematic solution.
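To make the gap-filling idea concrete, here is a minimal sketch of marker-level filling. It uses plain linear interpolation as a simpler stand-in for spline filling, and it is not the method from the paper. The function name `fill_gaps_linear` and the convention of storing a missing sample as `None` are assumptions made for illustration.

```python
def fill_gaps_linear(path):
    """Fill missing samples (None) in one marker path by linear interpolation.

    `path` is a list of (x, y, z) tuples, one per frame, with None where the
    marker was not observed. Gaps at the very start or end have no sample on
    one side to interpolate from, so they are left as None.
    """
    filled = list(path)
    known = [i for i, p in enumerate(path) if p is not None]
    # Walk over consecutive pairs of observed frames and fill what lies between.
    for a, b in zip(known, known[1:]):
        for i in range(a + 1, b):
            t = (i - a) / (b - a)  # 0 at frame a, 1 at frame b
            filled[i] = tuple(
                (1 - t) * pa + t * pb for pa, pb in zip(path[a], path[b])
            )
    return filled


# A three-frame gap between two observed positions.
path = [(0.0, 0.0, 0.0), None, None, None, (4.0, 0.0, 8.0)]
filled = fill_gaps_linear(path)
```

The fill depends only on the two samples that border the gap, so whatever the marker actually did while it was hidden is lost. That is harmless over a few frames and increasingly wrong as the gap grows.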

Kinematic-level solutions address this by bypassing marker gap filling and generating a kinematic solution directly from the raw marker data. Recent advances have brought these methods to state-of-the-art performance. However, they are hard to integrate into existing pipelines because of what happens when they do fail: MOCAP artists who are used to fixing motion capture data by modifying marker paths can no longer do so, since the marker paths are no longer available.

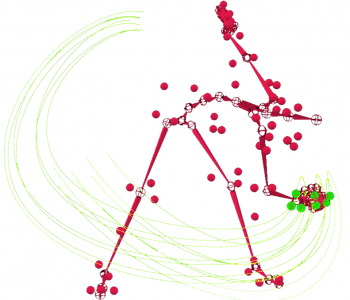

We propose a method that fixes this issue by merging both approaches. First, a state-of-the-art robust kinematic solver [Holden 2018] is used to generate a kinematic solution. A commercial solver that is not robust to marker noise is then used to generate a second kinematic solution, which can be viewed as a representation of the broken marker data in the kinematic domain. These two solutions are then compared on a per-joint basis to determine sections of broken marker data. Markers are then reconstructed from the robust kinematic solution using linear blend skinning. A blending algorithm is subsequently used to fix sections of the original marker path data, using the reconstructed marker paths as reference. Finally, a kinematic solution is recomputed from the blended marker paths to validate the result.
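The comparison and blending steps can be sketched roughly as follows. This is an illustrative simplification, not the algorithm from the paper: it treats a single joint and a single marker, flags a frame as broken when the two solutions place the joint more than a fixed distance apart, and blends with a linear ramp. The function names, the `threshold` and `ramp` parameters, and the per-frame list layout are all assumptions.

```python
import math


def broken_frames(robust_joint, naive_joint, threshold):
    """Flag frames where the two solutions disagree on one joint's position.

    Both inputs are lists of (x, y, z) positions, one per frame.
    `threshold` is a hypothetical distance tolerance.
    """
    return [math.dist(a, b) > threshold for a, b in zip(robust_joint, naive_joint)]


def blend_weights(broken, ramp):
    """Weight 1.0 on broken frames, falling linearly to 0.0 over `ramp`
    neighbouring frames, so the repaired path has no sudden jumps."""
    bad = [i for i, flag in enumerate(broken) if flag]
    weights = [0.0] * len(broken)
    if not bad:
        return weights
    for i in range(len(broken)):
        nearest = min(abs(i - j) for j in bad)  # distance to closest broken frame
        weights[i] = max(0.0, 1.0 - nearest / (ramp + 1))
    return weights


def repair_marker(original, reconstructed, weights):
    """Blend the original marker path toward the reconstructed one.

    Weight 0 keeps the original sample and weight 1 uses the reconstruction.
    Frames where the original marker is missing (None) take the reconstruction.
    """
    repaired = []
    for orig, recon, w in zip(original, reconstructed, weights):
        if orig is None:
            repaired.append(tuple(recon))
        else:
            repaired.append(tuple((1 - w) * o + w * r for o, r in zip(orig, recon)))
    return repaired


# Five frames; the non-robust solution is thrown off at frame 2 only.
robust = [(0.0, 0.0, 0.0)] * 5
naive = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (5.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
flags = broken_frames(robust, naive, threshold=1.0)
weights = blend_weights(flags, ramp=1)

original = [(1.0, 0.0, 0.0)] * 5
reconstructed = [(0.0, 0.0, 0.0)] * 5
repaired = repair_marker(original, reconstructed, weights)
```

Frames far from any detected problem keep their captured marker positions untouched, so the output is still an ordinary set of marker paths that an artist can inspect and edit.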

This method makes it easier to integrate state-of-the-art kinematic solutions into production pipelines. I’d like to thank everyone at Ubisoft LaForge and Ubisoft Alice who helped with this work. I’d like to specifically thank Daniel Holden for his tremendous support, as well as my supervisor, Tiberiu Popa. For more information, check out the full paper on the subject.